Building a Secure, Hybrid NLP Chatbot for FinTech Compliance and Efficiency

This case study highlights how L&Q Technologies leveraged advanced NLP and a strategic hybrid LLM architecture to deliver a high-performance, confidential AI agent for a major FinTech client.

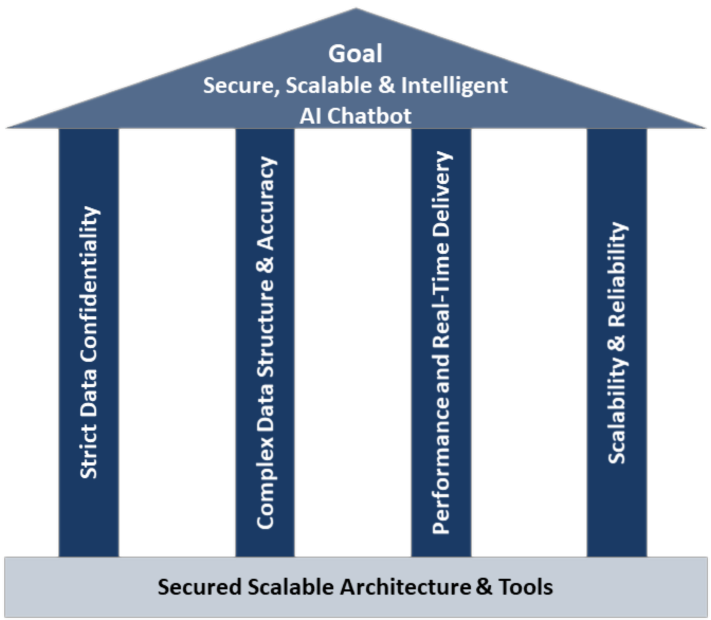

1. The Challenge: Confidentiality Meets Complexity

Our FinTech client needed a powerful AI chatbot for querying proprietary data. The project required an intelligent solution capable of delivering real-time access to complex internal information while meeting non-negotiable FinTech standards for confidentiality and data security. Crucially, the architecture we designed had to ensure all data was stored and processed exclusively within the secured zone.

- Strict Data Confidentiality: The primary constraint was ensuring confidential and proprietary data remained fully isolated, preventing any exposure to external or public-facing AI models.

- Complex Data Structure & Accuracy: The system required sophisticated query mechanisms to handle complex structured and unstructured data accurately, moving beyond simple keyword matching.

- Performance and Real-Time Delivery: The solution demanded highly reliable, real-time responses to maintain a seamless user experience, necessitating fast processing and minimal latency.

- Future-Proof Scalability & Reliability: The architecture needed to manage the existing extensive reference content while guaranteeing future horizontal scalability to handle growing data volumes and user demands.

These challenges demanded a fundamental rethink of how AI is deployed. Resolving the conflict between uncompromised security and high-performance intelligence required us to move beyond standard models and engineer a customized solution.

2. L&Q’s Strategic Solution: Hybrid LLM Architecture

We delivered a multi-layered approach focused on security, precision, and speed.

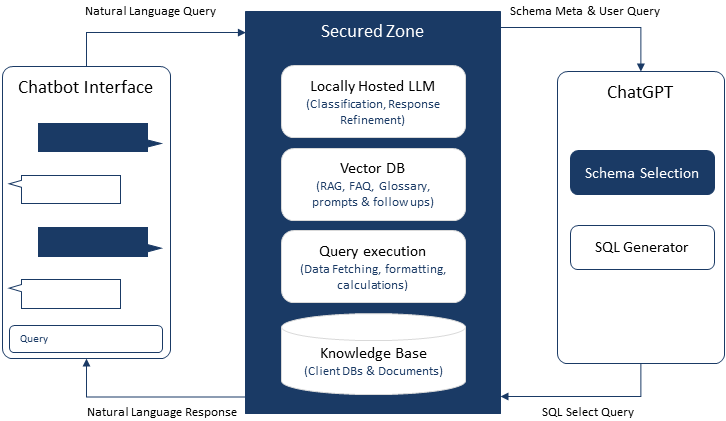

A. Secured-Zone Data Handling

We engineered a strictly secured zone for data processing and query execution. This foundational requirement ensured that all internal database schemas and documents were queried and processed without ever leaving the client's secured environment. This met the stringent security and confidentiality requirements (such as GDPR and CCPA) that apply in the FinTech domain.

- Secure Input: The user's natural language query is passed securely to the locally hosted LLM within the Secured Zone.

- External Model Isolation: The Knowledge Base (client DBs and documents) is never exposed to external models like ChatGPT.

- Schema Transmission Only: Only non-sensitive data (the schema metadata and the user query) is sent to ChatGPT for complex NLP logic and SQL generation.

- Local Execution and Refinement: The resulting SQL SELECT query is returned to the Secured Zone, where the query execution component and the locally hosted LLM handle the actual data fetching, formatting, and final response refinement before delivery to the user.

In essence, this architecture is built for compliance: by isolating the client's knowledge base and proprietary data within the Secured Zone, we eliminate the primary regulatory risk associated with external LLM usage, delivering a high-performance AI solution that meets the highest standards of FinTech confidentiality.
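The secured-zone flow described in this subsection can be illustrated with a minimal sketch. It is not the production implementation: it assumes a SQLite database as a stand-in for the client DBs, and the function names (`extract_schema_metadata`, `build_external_payload`, `answer_question`) and the `generate_sql` callable that represents the external commercial model are all hypothetical. The point it shows is that only table and column names plus the user's question cross the zone boundary, while the generated SQL runs locally.

```python
import sqlite3
from typing import Callable, Dict, List, Tuple


def extract_schema_metadata(conn: sqlite3.Connection) -> Dict[str, List[str]]:
    """Collect table and column definitions only; no row values are read."""
    tables = [
        row[0]
        for row in conn.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
        )
    ]
    schema: Dict[str, List[str]] = {}
    for table in tables:
        # PRAGMA table_info rows are (cid, name, type, notnull, dflt_value, pk).
        columns = conn.execute(f'PRAGMA table_info("{table}")').fetchall()
        schema[table] = [f"{col[1]} {col[2]}" for col in columns]
    return schema


def build_external_payload(schema: Dict[str, List[str]], question: str) -> str:
    """Build the only text that leaves the secured zone: schema plus question."""
    lines = [f"{table}({', '.join(cols)})" for table, cols in schema.items()]
    return "Schema:\n" + "\n".join(lines) + f"\nQuestion: {question}"


def answer_question(
    conn: sqlite3.Connection,
    question: str,
    generate_sql: Callable[[str], str],  # hypothetical wrapper around the external model
) -> List[Tuple]:
    payload = build_external_payload(extract_schema_metadata(conn), question)
    sql = generate_sql(payload)          # the single call that crosses the boundary
    return conn.execute(sql).fetchall()  # data is fetched inside the secured zone


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE accounts (owner TEXT, balance REAL)")
    conn.executemany(
        "INSERT INTO accounts VALUES (?, ?)", [("alice", 2500.0), ("bob", 300.0)]
    )

    # Stand-in for the external model so the sketch runs offline.
    def fake_external_model(payload: str) -> str:
        return "SELECT owner, balance FROM accounts WHERE balance > 1000"

    print(answer_question(conn, "Which accounts hold more than 1000?", fake_external_model))
```

In a real deployment the returned SQL would be validated before execution, and the rows would be passed to the locally hosted LLM for formatting rather than returned directly.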

B. Adaptive, Intuitive User Experience (UX)

We built an adaptive UX that guides users with smart suggestions, reducing friction and speeding up discovery.

- Intelligent Guidance: The system provides intuitive follow-up and suggestion prompts that let users navigate and refine their query path to the exact information they are seeking.

- Personalized Query History: Users gain efficiency by easily accessing, editing, and fine-tuning previous questions in the current session, alongside search access to historical conversation sessions.

- Dynamic Knowledge Base: The system supports accurate query resolution by providing access to predefined FAQs, a glossary, and documentation, supplemented by auto-generated FAQs based on user activity.

- Platform Integration: The chatbot is fully linked to the customer platform, allowing result sets to be directed to relevant application modules, such as reports and interactive charts.

By delivering intelligent guidance and personalized query history, this UX addresses the challenge of accessing complex information. The result is a seamless, highly intuitive customer experience that boosts engagement and trust in the platform.

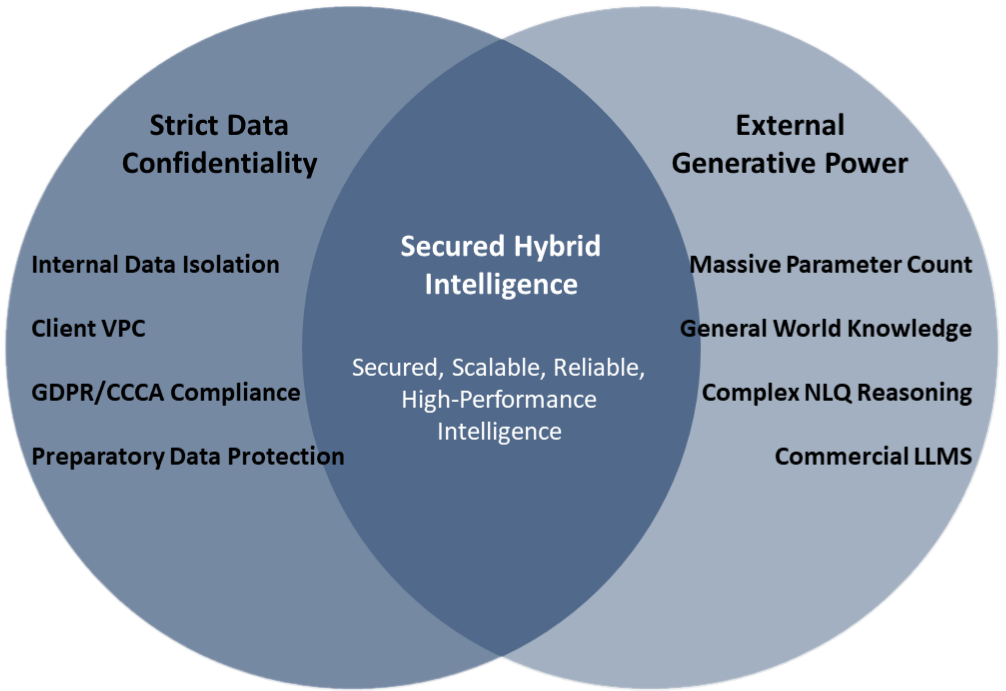

C. Strategic Hybrid Model Implementation

The ultimate solution to the security and performance challenge was deploying a strategic hybrid model. This architecture allowed us to leverage the massive processing power of proven commercial AI models while strictly isolating the client's sensitive data behind a securely hosted, fine-tuned Large Language Model (LLM).

- Proven Commercial Model (ChatGPT/Gemini): We leverage the intelligence of external models primarily for complex tasks like understanding the database schema and accurately generating the complex SQL queries, ensuring optimization and reliability.

- Fine-Tuned Local LLM: The local model enforces critical controls such as Classification, Guardrails, and Ethics checks. It also handles Natural Language Response processing and Follow-up Prompt generation, ensuring the final user experience is refined and secure.

By strategically blending internal security and external performance capabilities, this hybrid architecture resolved the inherent conflict of the project. It ensures the client benefits from the latest AI advancements while keeping full control of their proprietary data assets and staying compliant.
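The case study does not describe how the local guardrails are implemented. One plausible check, sketched below purely as an assumption, is to verify that SQL returned by the external model is a single read-only SELECT over known tables before it is executed. The function name `is_safe_select`, the keyword list, and the regular expressions are illustrative; they are deliberately coarse, and a real deployment would more likely use a proper SQL parser plus read-only database credentials.

```python
import re
from typing import Set

# Keywords that should never appear in a read-only query (illustrative list).
FORBIDDEN = re.compile(
    r"\b(insert|update|delete|drop|alter|create|attach|pragma|truncate|grant)\b",
    re.IGNORECASE,
)
# Coarse extraction of table names that follow FROM or JOIN.
TABLE_REF = re.compile(r"\b(?:from|join)\s+([A-Za-z_][A-Za-z0-9_]*)", re.IGNORECASE)


def is_safe_select(sql: str, allowed_tables: Set[str]) -> bool:
    """Return True only for a single SELECT statement over allowed tables."""
    statement = sql.strip().rstrip(";").strip()
    if ";" in statement:
        return False  # more than one statement
    if not re.match(r"select\b", statement, re.IGNORECASE):
        return False  # must start with SELECT
    if FORBIDDEN.search(statement):
        return False  # conservative: rejects even quoted occurrences
    referenced = {name.lower() for name in TABLE_REF.findall(statement)}
    allowed = {name.lower() for name in allowed_tables}
    return bool(referenced) and referenced <= allowed


if __name__ == "__main__":
    tables = {"accounts", "transfers"}
    print(is_safe_select("SELECT owner FROM accounts WHERE balance > 1000;", tables))
    print(is_safe_select("SELECT * FROM accounts; DROP TABLE accounts", tables))
```

The check errs on the side of rejection: a legitimate query that mentions a forbidden keyword inside a string literal is refused, which is usually the safer failure mode for a compliance-sensitive system.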

3. Results & Value Realization

The successful delivery of this project demonstrated our ability to apply cutting-edge AI/ML to solve real-world, high-stakes business problems. The resulting Hybrid Chatbot platform delivered three key areas of value:

- Guaranteed Data Confidentiality & Compliance: The secure-zone architecture provided the client with complete confidence that proprietary and confidential data would never be exposed to external services, mitigating massive regulatory and business risk in the FinTech sector.

- Enhanced Operational Efficiency: The intelligent, adaptive chatbot significantly reduced the time employees and customers spent searching complex internal documents, leading to faster decision-making and streamlined internal workflows.

- Proven Platform Reliability: The Hybrid LLM model delivered a highly resilient and performant solution, demonstrating high availability and validating L&Q's ability to integrate specialized AI agents into critical, high-demand enterprise systems.

Key Technologies Used

- LLMs & Models: Mistral 7B, OpenAI (for the Commercial Model)

- NLP & Retrieval: NLTK, Punkt, FAISS, and Vector DB (for RAG implementation)

- Architecture: Hybrid LLM Architecture, Secured Data Zone

- Domain: FinTech, Enterprise Systems

4. Conclusion and Next Steps

The true power of AI comes from strategic, secure design. By pairing external language capability with a local, guarded LLM, we turned a potential risk into a competitive advantage.

L&Q’s hybrid LLM architecture delivers high-performance intelligence while maintaining 100% data confidentiality.

Ready to transform your data challenges into a market-leading AI solution? Contact our strategy team today.